
Challenge 1: Cross-Task Transfer Learning!

Preliminary notes

Before we begin, I just want to make a deal with you, ok? This is a community competition with a strong open-source foundation. When I say open-source, I mean volunteer work.

So, if you see something that does not work or could be improved, first, please be kind, and we will fix it together on GitHub, okay?

The entire decoding community will only go further when we stop solving the same problems over and over again and start working together.

How can we transfer knowledge from one EEG decoding task to another?

Transfer learning is a widespread technique used in deep learning. It uses knowledge learned from one source task/domain in another target task/domain. It has been studied in depth in computer vision, natural language processing, and speech, but what about EEG brain decoding?

The cross-task transfer learning scenario in EEG decoding is remarkably underexplored compared to the development of new models (Aristimunha et al., 2023), even though it can be much more useful for real applications (see Wimpff et al., 2025; Wu et al., 2025).

Our Challenge 1 addresses a key goal in neurotechnology: decoding cognitive function from EEG using knowledge pre-trained on another task. In other words, the goal is to develop models that can effectively transfer/adapt/adjust/fine-tune knowledge from passive EEG tasks to active tasks.

We believe the ability to generalize and transfer is critical and deserves more focus. It lets us go beyond comparing metric numbers that are often not comparable, given the specificities of EEG such as preprocessing, inter-subject variability, and many other unique characteristics of this type of data.

This means your submitted model might be trained on a subset of tasks and fine-tuned on data from another condition, evaluating its capacity to generalize with task-specific fine-tuning.

Note

For simplicity, we only show how to decode directly on our target task; it is up to the teams to decide how to use the passive task for pre-training.
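One common pattern for reusing a model pre-trained on a passive task is to keep its feature extractor, attach a new output layer, and fine-tune on the target task. The sketch below illustrates only that freeze/replace-head pattern. It assumes a hypothetical toy backbone (`TinyEEGBackbone`) instead of EEGNeX and pretends the passive-task training has already happened; `build_finetune_model` is likewise a hypothetical helper, not part of braindecode or eegdash.

```python
import copy

import torch
from torch import nn


class TinyEEGBackbone(nn.Module):
    """Hypothetical stand-in for a feature extractor pre-trained on a passive task."""

    def __init__(self, n_chans: int = 129, n_features: int = 16):
        super().__init__()
        self.spatial = nn.Conv1d(n_chans, n_features, kernel_size=1)  # mixes channels
        self.pool = nn.AdaptiveAvgPool1d(1)  # averages over time
        self.flatten = nn.Flatten()

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # x: (batch, n_chans, n_times) -> (batch, n_features)
        return self.flatten(self.pool(torch.relu(self.spatial(x))))


def build_finetune_model(pretrained_backbone: nn.Module, n_features: int,
                         n_outputs: int = 1, freeze_backbone: bool = True) -> nn.Module:
    """Copy a pre-trained backbone and attach a fresh head for the target task."""
    backbone = copy.deepcopy(pretrained_backbone)  # leave the source model untouched
    for p in backbone.parameters():
        p.requires_grad = not freeze_backbone
    head = nn.Linear(n_features, n_outputs)  # new, randomly initialised head
    return nn.Sequential(backbone, head)


torch.manual_seed(0)
source_backbone = TinyEEGBackbone()  # pretend this was trained on a passive task
model = build_finetune_model(source_backbone, n_features=16)

# Only parameters that still require gradients are handed to the optimizer.
optimizer = torch.optim.AdamW([p for p in model.parameters() if p.requires_grad], lr=1e-3)

x = torch.randn(8, 129, 200)  # 8 windows, 129 channels, 200 samples
y = torch.randn(8, 1)         # dummy response-time targets
loss = nn.functional.mse_loss(model(x), y)
optimizer.zero_grad()
loss.backward()
optimizer.step()
print(model(x).shape)  # torch.Size([8, 1])
```

Setting `freeze_backbone=False` fine-tunes every layer instead; which layers to freeze, and with what learning rate, is exactly the kind of design choice left to the teams.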

Install dependencies

For the challenge, we will need two significant dependencies: braindecode and eegdash. These libraries will install PyTorch, PyTorch Audio, Scikit-learn, MNE, MNE-BIDS, and many other packages needed for the various functions.

To install the dependencies on Colab or your local machine, you can just run the command below, since eegdash has braindecode as a dependency:

pip install eegdash
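After installing, a quick sanity check is to ask Python's package metadata which versions were actually pulled in. This is a small standard-library sketch; the package list is an assumption based on the dependencies named above, and `installed_versions` is a hypothetical helper name.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_versions(packages=("eegdash", "braindecode", "torch", "mne")):
    """Return {distribution name: version string, or None if not installed}."""
    found = {}
    for name in packages:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            found[name] = None
    return found


for pkg, ver in installed_versions().items():
    print(f"{pkg:12s} {ver or 'NOT INSTALLED'}")
```

Distribution names (what `pip` installs) can differ from import names, so this checks the former.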

Imports and setup

from pathlib import Path

import torch
from braindecode.datasets import BaseConcatDataset
from braindecode.preprocessing import (
    preprocess,
    Preprocessor,
    create_windows_from_events,
)
from braindecode.models import EEGNeX
from torch.utils.data import DataLoader
from sklearn.model_selection import train_test_split
from sklearn.utils import check_random_state
from typing import Optional
from torch.nn import Module
from torch.optim.lr_scheduler import LRScheduler
from tqdm import tqdm
import copy

Check GPU availability

Identify whether a CUDA-enabled GPU is available and set the device accordingly. If using Google Colab, ensure that the runtime is set to use a GPU. This can be done by navigating to Runtime > Change runtime type and selecting GPU as the hardware accelerator.

device = "cuda" if torch.cuda.is_available() else "cpu"
if device == "cuda":
    msg = "CUDA-enabled GPU found. Training should be faster."
else:
    msg = (
        "No GPU found. Training will be carried out on CPU, which might be "
        "slower.\n\nIf running on Google Colab, you can request a GPU runtime by"
        " clicking\n`Runtime/Change runtime type` in the top bar menu, then "
        "selecting 'T4 GPU'\nunder 'Hardware accelerator'."
    )
print(msg)

No GPU found. Training will be carried out on CPU, which might be slower.

If running on Google Colab, you can request a GPU runtime by clicking
`Runtime/Change runtime type` in the top bar menu, then selecting 'T4 GPU'
under 'Hardware accelerator'.

What are we decoding?

Before discussing what we want to analyse, it helps to understand some basic concepts.

The brain decoding problem

Broadly speaking, here brain decoding is the following problem: given brain time-series signals \(X \in \mathbb{R}^{C \times T}\) with labels \(y \in \mathcal{Y}\), we implement a neural network \(f\) that decodes/translates brain activity into the target label.

We aim to translate recorded brain activity into its originating stimulus, behavior, or mental state (King, J-R. et al., 2020).
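Since the Challenge 1 label (response time) is a continuous value, one way to write this decoding problem is as regression, with \(\mathcal{Y} = \mathbb{R}\). As a sketch, assuming a mean-squared-error loss over \(N\) labelled windows (the loss is our assumption here, not a stated competition rule) and writing \(f_{\theta}\) for the network with parameters \(\theta\), training amounts to:

\[\hat{\theta} = \arg\min_{\theta} \frac{1}{N} \sum_{i=1}^{N} \big(f_{\theta}(X_i) - y_i\big)^2, \qquad X_i \in \mathbb{R}^{C \times T},\ y_i \in \mathbb{R}.\]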

The neural network \(f\) applies a series of transformation layers (e.g., torch.nn.Conv2d, torch.nn.Linear, torch.nn.ELU, torch.nn.BatchNorm2d) to the data to filter, extract features, and learn embeddings relevant to the optimization objective. In other words:

\[f_{\theta}: X \to y,\]

where \(C\) (n_chans) is the number of channels/electrodes and \(T\) (n_times) is the temporal window length/epoch size over the interval of interest. Here, \(\theta\) denotes the parameters learned by the neural network.

Input/Output definition

For the competition, the HBN-EEG (Healthy Brain Network EEG Datasets) dataset has n_chans = 129, with the last channel being the reference channel, and we define the window length as n_times = 200, corresponding to 2-second windows at the 100 Hz sampling rate. Your model should follow this definition exactly; any specific selection of channels, filtering, or domain-adaptation technique must be performed within the layers of the neural network model.
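To make the input/output contract concrete, here is a minimal sketch of a model that accepts the full `(batch, 129, 200)` input and performs channel selection inside `forward`, as required above. The wrapper `ChannelSubsetModel`, the kept channel indices, and the toy linear backbone are all hypothetical; in practice the backbone could be EEGNeX or any other model.

```python
import torch
from torch import nn

N_CHANS, N_TIMES = 129, 200  # competition input definition (2 s at 100 Hz)


class ChannelSubsetModel(nn.Module):
    """Hypothetical wrapper: selects channels inside the model, then predicts."""

    def __init__(self, keep_idx, backbone: nn.Module):
        super().__init__()
        # A buffer moves with the model across devices (e.g. model.to("cuda")).
        self.register_buffer("keep_idx", torch.as_tensor(keep_idx, dtype=torch.long))
        self.backbone = backbone

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        if x.shape[1:] != (N_CHANS, N_TIMES):
            raise ValueError(f"expected (batch, {N_CHANS}, {N_TIMES}), got {tuple(x.shape)}")
        x = x.index_select(dim=1, index=self.keep_idx)  # (batch, len(keep_idx), n_times)
        return self.backbone(x)


keep = list(range(128))  # assumption: drop the last (reference) channel
backbone = nn.Sequential(nn.Flatten(), nn.Linear(len(keep) * N_TIMES, 1))
model = ChannelSubsetModel(keep, backbone)

x = torch.randn(4, N_CHANS, N_TIMES)
print(model(x).shape)  # torch.Size([4, 1])
```

Because the selection lives inside the model, whoever evaluates it only ever feeds the standard 129-channel window.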

In this tutorial, we will use the EEGNeX model from braindecode as an example. You can use any model you want, as long as it follows the input/output definitions above.

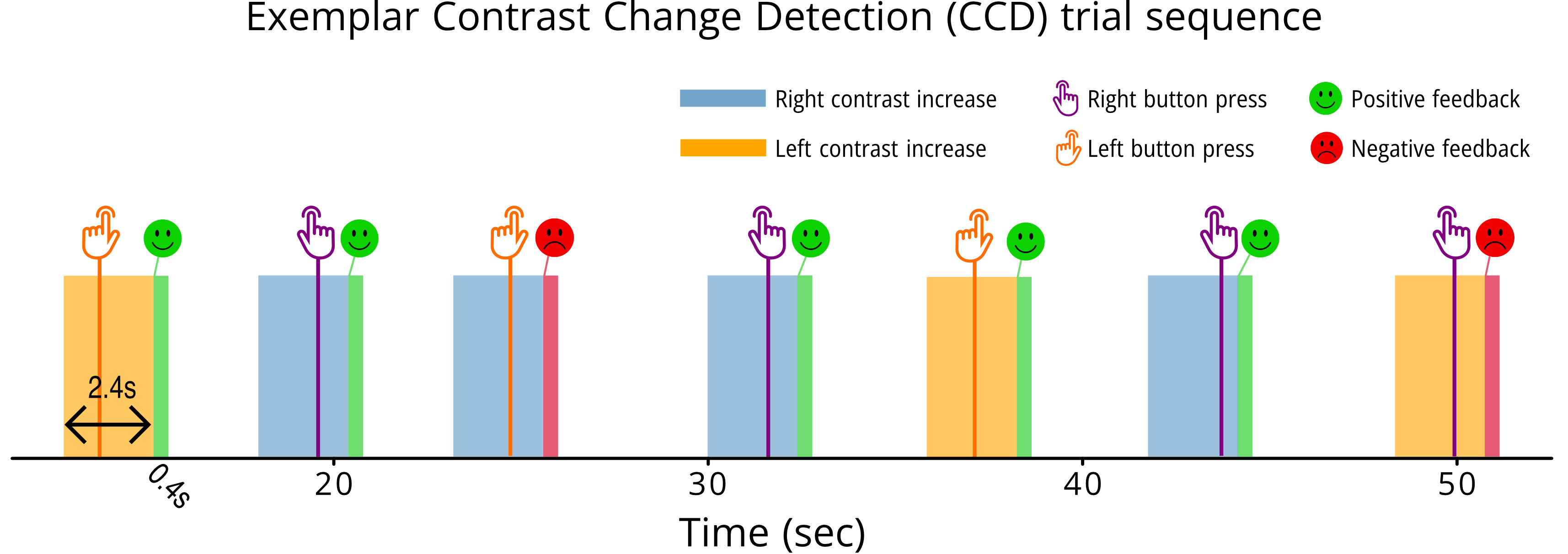

Understand the task: Contrast Change Detection (CCD)

If you are interested in more neuroscience insight, we recommend these two references: HBN-EEG and Langer, N. et al. (2017).

In the Video, we have an example of recording cognitive activity:

The Contrast Change Detection (CCD) task relates to Steady-State Visual Evoked Potentials (SSVEP) and Event-Related Potentials (ERP).

Algorithmically, what the subject sees during recording is:

Two flickering striped discs: one tilted left, one tilted right.

After a variable delay, one disc’s contrast gradually increases while the other decreases.

They press left or right to indicate which disc got stronger.

They receive feedback (🙂 correct / 🙁 incorrect).

The task parallels SSVEP and ERP:

The continuous flicker tags the EEG at fixed frequencies (and harmonics) → SSVEP-like signals.

The ramp onset, the button press, and the feedback are time-locked events that yield ERP-like components.
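The frequency-tagging idea behind the SSVEP-like signals can be illustrated with a synthetic signal: a component flickering at a fixed rate shows up as a spectral peak at that frequency and at its harmonics. This sketch uses the challenge's 100 Hz sampling rate and a 2-second window; the 15 Hz flicker rate, amplitudes, and noise level are made-up values for illustration, not the actual CCD stimulus parameters.

```python
import numpy as np

SFREQ = 100.0     # Hz, sampling rate of the challenge data
N_TIMES = 200     # 2-second window
F_FLICKER = 15.0  # Hz, hypothetical flicker frequency

rng = np.random.default_rng(0)
t = np.arange(N_TIMES) / SFREQ
signal = (
    1.0 * np.sin(2 * np.pi * F_FLICKER * t)        # fundamental
    + 0.4 * np.sin(2 * np.pi * 2 * F_FLICKER * t)  # second harmonic
    + 0.3 * rng.standard_normal(N_TIMES)           # background "EEG" noise
)

freqs = np.fft.rfftfreq(N_TIMES, d=1 / SFREQ)  # 0.5 Hz resolution for a 2 s window
power = np.abs(np.fft.rfft(signal)) ** 2

# The two strongest bins: fundamental first, then the harmonic.
top_two = freqs[np.argsort(power)[-2:][::-1]]
print(top_two)  # [15. 30.]
```

Note that the 2-second window fixes the frequency resolution at 0.5 Hz, and the 100 Hz sampling rate caps visible harmonics at the 50 Hz Nyquist frequency.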

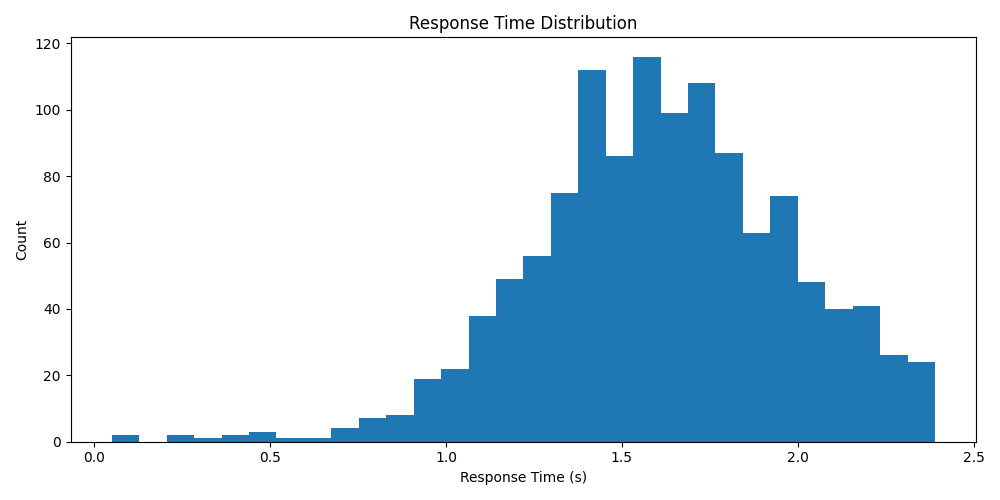

Your task (label) is to predict the subject's response time during these windows.
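Conceptually, the response time for a trial is the delay between the onset of the contrast ramp and the subject's button press. The sketch below computes that quantity from a generic list of `(onset_seconds, event_type)` pairs. The event names `contrast_ramp_start` and `button_press` and the helper `response_times` are hypothetical placeholders, not the actual HBN event codes or the way eegdash computes its targets internally.

```python
from typing import List, Optional, Tuple

Event = Tuple[float, str]  # (onset in seconds, event type)


def response_times(events: List[Event],
                   stim_type: str = "contrast_ramp_start",
                   resp_type: str = "button_press") -> List[Optional[float]]:
    """One RT in seconds per stimulus; None if no response before the next stimulus."""
    rts: List[Optional[float]] = []
    pending: Optional[float] = None  # onset of the current unanswered stimulus
    for onset, kind in sorted(events):
        if kind == stim_type:
            if pending is not None:  # previous trial got no response
                rts.append(None)
            pending = onset
        elif kind == resp_type and pending is not None:
            rts.append(round(onset - pending, 3))
            pending = None
    if pending is not None:  # last trial without a response
        rts.append(None)
    return rts


events = [
    (10.0, "contrast_ramp_start"), (10.82, "button_press"), (11.5, "feedback"),
    (20.0, "contrast_ramp_start"), (21.0, "feedback"),  # missed trial
    (30.0, "contrast_ramp_start"), (30.55, "button_press"),
]
print(response_times(events))  # [0.82, None, 0.55]
```

Trials without a response yield `None` here so they can be dropped explicitly rather than silently producing a bogus target.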

In the figure below, we have the timeline representation of the cognitive task:

Stimulus demonstration

PyTorch Dataset for the competition

Next, we create a PyTorch Dataset object that contains the set of recordings for the contrastChangeDetection task.

from eegdash.dataset import EEGChallengeDataset
from eegdash.hbn.windows import (
    annotate_trials_with_target,
    add_aux_anchors,
    keep_only_recordings_with,
    add_extras_columns,
)
from eegdash.paths import get_default_cache_dir

# Match tests' cache layout under ~/eegdash_cache/eeg_challenge_cache
DATA_DIR = Path(get_default_cache_dir()).resolve()
DATA_DIR.mkdir(parents=True, exist_ok=True)

dataset_ccd = EEGChallengeDataset(
    task="contrastChangeDetection", release="R5", cache_dir=DATA_DIR, mini=True
)

# The dataset contains 20 subjects in the mini release, and each subject has multiple
# recordings (runs). Each recording is represented as a dataset object within the
# `dataset_ccd.datasets` list.
print(f"Number of recordings in the dataset: {len(dataset_ccd.datasets)}")
print(
    f"Number of unique subjects in the dataset: {dataset_ccd.description['subject'].nunique()}"
)

# This dataset object has rich Raw object details that can help you
# understand the data better. The framework behind this is braindecode,
# and if you want to understand in depth what is happening, we recommend
# the braindecode GitHub repository itself.

# We can also access the Raw object for visualization purposes; here we look at just one.
raw = dataset_ccd.datasets[0].raw  # get the Raw object of the first recording

# To download all the data directly, you can do:
dataset_ccd.download_all(n_jobs=-1)

╭────────────────────── EEG 2025 Competition Data Notice ──────────────────────╮
│ This object loads the HBN dataset that has been preprocessed for the EEG │
│ Challenge: │
│ * Downsampled from 500Hz to 100Hz │
│ * Bandpass filtered (0.5-50 Hz) │
│ │
│ For full preprocessing applied for competition details, see: │
│ https://github.com/eeg2025/downsample-datasets │
│ │
│ The HBN dataset have some preprocessing applied by the HBN team: │
│ * Re-reference (Cz Channel) │
│ │
│ IMPORTANT: The data accessed via `EEGChallengeDataset` is NOT identical to │
│ what you get from EEGDashDataset directly. │
│ If you are participating in the competition, always use │
│ `EEGChallengeDataset` to ensure consistency with the challenge data. │
╰──────────────────────── Source: EEGChallengeDataset ─────────────────────────╯

Number of recordings in the dataset: 60

Number of unique subjects in the dataset: 20


Downloading sub-NDARBH228RDW_task-contrastChangeDetection_run-2_eeg.bdf: 100%|██████████| 1.00/1.00 [00:00<00:00, 4.84B/s]

Downloading sub-NDARBJ674TVU_task-contrastChangeDetection_run-1_eeg.bdf: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARBH228RDW_task-contrastChangeDetection_run-1_eeg.bdf: 100%|██████████| 1.00/1.00 [00:00<00:00, 2.50B/s]

Downloading sub-NDARBH228RDW_task-contrastChangeDetection_run-1_eeg.bdf: 100%|██████████| 1.00/1.00 [00:00<00:00, 2.49B/s]

Downloading sub-NDARBH228RDW_task-contrastChangeDetection_run-3_eeg.bdf: 100%|██████████| 1.00/1.00 [00:00<00:00, 5.70B/s]

Downloading sub-NDARBJ674TVU_task-contrastChangeDetection_run-3_channels.tsv: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARBJ674TVU_task-contrastChangeDetection_run-3_channels.tsv: 100%|██████████| 1.00/1.00 [00:00<00:00, 12.6B/s]

Downloading sub-NDARBJ674TVU_task-contrastChangeDetection_run-2_channels.tsv: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARBJ674TVU_task-contrastChangeDetection_run-1_eeg.bdf: 100%|██████████| 1.00/1.00 [00:00<00:00, 3.26B/s]

Downloading sub-NDARBJ674TVU_task-contrastChangeDetection_run-1_eeg.bdf: 100%|██████████| 1.00/1.00 [00:00<00:00, 3.26B/s]

Downloading sub-NDARBM433VER_task-contrastChangeDetection_run-1_channels.tsv: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARBJ674TVU_task-contrastChangeDetection_run-2_channels.tsv: 100%|██████████| 1.00/1.00 [00:00<00:00, 13.0B/s]

Downloading sub-NDARBM433VER_task-contrastChangeDetection_run-1_channels.tsv: 100%|██████████| 1.00/1.00 [00:00<00:00, 12.7B/s]

Downloading sub-NDARBM433VER_task-contrastChangeDetection_run-2_channels.tsv: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARBJ674TVU_task-contrastChangeDetection_run-3_events.tsv: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARBM433VER_task-contrastChangeDetection_run-2_channels.tsv: 100%|██████████| 1.00/1.00 [00:00<00:00, 13.0B/s]

Downloading sub-NDARBJ674TVU_task-contrastChangeDetection_run-3_events.tsv: 100%|██████████| 1.00/1.00 [00:00<00:00, 13.2B/s]

Downloading sub-NDARBJ674TVU_task-contrastChangeDetection_run-2_events.tsv: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARBM433VER_task-contrastChangeDetection_run-1_events.tsv: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARBJ674TVU_task-contrastChangeDetection_run-2_events.tsv: 100%|██████████| 1.00/1.00 [00:00<00:00, 13.3B/s]

Downloading sub-NDARBJ674TVU_task-contrastChangeDetection_run-3_eeg.json: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARBM433VER_task-contrastChangeDetection_run-1_events.tsv: 100%|██████████| 1.00/1.00 [00:00<00:00, 9.20B/s]

Downloading sub-NDARBM433VER_task-contrastChangeDetection_run-2_events.tsv: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARBJ674TVU_task-contrastChangeDetection_run-3_eeg.json: 100%|██████████| 1.00/1.00 [00:00<00:00, 13.1B/s]

Downloading sub-NDARBJ674TVU_task-contrastChangeDetection_run-2_eeg.json: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARBM433VER_task-contrastChangeDetection_run-2_events.tsv: 100%|██████████| 1.00/1.00 [00:00<00:00, 9.76B/s]

Downloading sub-NDARBM433VER_task-contrastChangeDetection_run-1_eeg.json: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARBJ674TVU_task-contrastChangeDetection_run-2_eeg.json: 100%|██████████| 1.00/1.00 [00:00<00:00, 13.4B/s]

Downloading sub-NDARBM433VER_task-contrastChangeDetection_run-1_eeg.json: 100%|██████████| 1.00/1.00 [00:00<00:00, 13.2B/s]

Downloading sub-NDARBJ674TVU_task-contrastChangeDetection_run-3_eeg.bdf: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARBM433VER_task-contrastChangeDetection_run-2_eeg.json: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARBJ674TVU_task-contrastChangeDetection_run-2_eeg.bdf: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARBM433VER_task-contrastChangeDetection_run-2_eeg.json: 100%|██████████| 1.00/1.00 [00:00<00:00, 13.2B/s]

Downloading sub-NDARBM433VER_task-contrastChangeDetection_run-1_eeg.bdf: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARBM433VER_task-contrastChangeDetection_run-2_eeg.bdf: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARBJ674TVU_task-contrastChangeDetection_run-3_eeg.bdf: 100%|██████████| 1.00/1.00 [00:00<00:00, 1.80B/s]

Downloading sub-NDARBJ674TVU_task-contrastChangeDetection_run-3_eeg.bdf: 100%|██████████| 1.00/1.00 [00:00<00:00, 1.79B/s]

Downloading sub-NDARBJ674TVU_task-contrastChangeDetection_run-2_eeg.bdf: 100%|██████████| 1.00/1.00 [00:00<00:00, 1.79B/s]

Downloading sub-NDARBJ674TVU_task-contrastChangeDetection_run-2_eeg.bdf: 100%|██████████| 1.00/1.00 [00:00<00:00, 1.79B/s]

Downloading sub-NDARBM433VER_task-contrastChangeDetection_run-3_channels.tsv: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARBM433VER_task-contrastChangeDetection_run-3_channels.tsv: 100%|██████████| 1.00/1.00 [00:00<00:00, 13.1B/s]

Downloading sub-NDARCA740UC8_task-contrastChangeDetection_run-2_channels.tsv: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARBM433VER_task-contrastChangeDetection_run-2_eeg.bdf: 100%|██████████| 1.00/1.00 [00:00<00:00, 1.87B/s]

Downloading sub-NDARBM433VER_task-contrastChangeDetection_run-2_eeg.bdf: 100%|██████████| 1.00/1.00 [00:00<00:00, 1.87B/s]

Downloading sub-NDARBM433VER_task-contrastChangeDetection_run-1_eeg.bdf: 100%|██████████| 1.00/1.00 [00:00<00:00, 1.37B/s]

Downloading sub-NDARBM433VER_task-contrastChangeDetection_run-1_eeg.bdf: 100%|██████████| 1.00/1.00 [00:00<00:00, 1.36B/s]

Downloading sub-NDARCA740UC8_task-contrastChangeDetection_run-2_channels.tsv: 100%|██████████| 1.00/1.00 [00:00<00:00, 13.4B/s]

Downloading sub-NDARBM433VER_task-contrastChangeDetection_run-3_events.tsv: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARCA740UC8_task-contrastChangeDetection_run-3_channels.tsv: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARBM433VER_task-contrastChangeDetection_run-3_events.tsv: 100%|██████████| 1.00/1.00 [00:00<00:00, 13.3B/s]

Downloading sub-NDARCA740UC8_task-contrastChangeDetection_run-1_channels.tsv: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARCA740UC8_task-contrastChangeDetection_run-3_channels.tsv: 100%|██████████| 1.00/1.00 [00:00<00:00, 13.8B/s]

Downloading sub-NDARCA740UC8_task-contrastChangeDetection_run-2_events.tsv: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARCA740UC8_task-contrastChangeDetection_run-1_channels.tsv: 100%|██████████| 1.00/1.00 [00:00<00:00, 11.7B/s]

Downloading sub-NDARCA740UC8_task-contrastChangeDetection_run-2_events.tsv: 100%|██████████| 1.00/1.00 [00:00<00:00, 12.6B/s]

Downloading sub-NDARBM433VER_task-contrastChangeDetection_run-3_eeg.json: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARCA740UC8_task-contrastChangeDetection_run-3_events.tsv: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARBM433VER_task-contrastChangeDetection_run-3_eeg.json: 100%|██████████| 1.00/1.00 [00:00<00:00, 12.7B/s]

Downloading sub-NDARCA740UC8_task-contrastChangeDetection_run-1_events.tsv: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARCA740UC8_task-contrastChangeDetection_run-2_eeg.json: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARCA740UC8_task-contrastChangeDetection_run-3_events.tsv: 100%|██████████| 1.00/1.00 [00:00<00:00, 12.7B/s]

Downloading sub-NDARCA740UC8_task-contrastChangeDetection_run-1_events.tsv: 100%|██████████| 1.00/1.00 [00:00<00:00, 11.2B/s]

Downloading sub-NDARCA740UC8_task-contrastChangeDetection_run-2_eeg.json: 100%|██████████| 1.00/1.00 [00:00<00:00, 11.5B/s]

Downloading sub-NDARBM433VER_task-contrastChangeDetection_run-3_eeg.bdf: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARCA740UC8_task-contrastChangeDetection_run-3_eeg.json: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARCA740UC8_task-contrastChangeDetection_run-3_eeg.json: 100%|██████████| 1.00/1.00 [00:00<00:00, 13.9B/s]

Downloading sub-NDARCA740UC8_task-contrastChangeDetection_run-1_eeg.json: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARCA740UC8_task-contrastChangeDetection_run-2_eeg.bdf: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARCA740UC8_task-contrastChangeDetection_run-1_eeg.json: 100%|██████████| 1.00/1.00 [00:00<00:00, 12.7B/s]

Downloading sub-NDARCA740UC8_task-contrastChangeDetection_run-3_eeg.bdf: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARBM433VER_task-contrastChangeDetection_run-3_eeg.bdf: 100%|██████████| 1.00/1.00 [00:00<00:00, 3.60B/s]

Downloading sub-NDARBM433VER_task-contrastChangeDetection_run-3_eeg.bdf: 100%|██████████| 1.00/1.00 [00:00<00:00, 3.60B/s]

Downloading sub-NDARCA740UC8_task-contrastChangeDetection_run-2_eeg.bdf: 100%|██████████| 1.00/1.00 [00:00<00:00, 5.17B/s]

Downloading sub-NDARCA740UC8_task-contrastChangeDetection_run-1_eeg.bdf: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARCU633GCZ_task-contrastChangeDetection_run-1_channels.tsv: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARCU633GCZ_task-contrastChangeDetection_run-3_channels.tsv: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARCA740UC8_task-contrastChangeDetection_run-3_eeg.bdf: 100%|██████████| 1.00/1.00 [00:00<00:00, 4.19B/s]

Downloading sub-NDARCA740UC8_task-contrastChangeDetection_run-3_eeg.bdf: 100%|██████████| 1.00/1.00 [00:00<00:00, 4.18B/s]

Downloading sub-NDARCU633GCZ_task-contrastChangeDetection_run-1_channels.tsv: 100%|██████████| 1.00/1.00 [00:00<00:00, 12.5B/s]

Downloading sub-NDARCU633GCZ_task-contrastChangeDetection_run-3_channels.tsv: 100%|██████████| 1.00/1.00 [00:00<00:00, 11.6B/s]

Downloading sub-NDARCA740UC8_task-contrastChangeDetection_run-1_eeg.bdf: 100%|██████████| 1.00/1.00 [00:00<00:00, 3.76B/s]

Downloading sub-NDARCA740UC8_task-contrastChangeDetection_run-1_eeg.bdf: 100%|██████████| 1.00/1.00 [00:00<00:00, 3.75B/s]

Downloading sub-NDARCU633GCZ_task-contrastChangeDetection_run-2_channels.tsv: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARCU633GCZ_task-contrastChangeDetection_run-1_events.tsv: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARCU633GCZ_task-contrastChangeDetection_run-3_events.tsv: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARCU633GCZ_task-contrastChangeDetection_run-2_channels.tsv: 100%|██████████| 1.00/1.00 [00:00<00:00, 12.5B/s]

Downloading sub-NDARCU633GCZ_task-contrastChangeDetection_run-3_events.tsv: 100%|██████████| 1.00/1.00 [00:00<00:00, 13.5B/s]

Downloading sub-NDARCU633GCZ_task-contrastChangeDetection_run-1_events.tsv: 100%|██████████| 1.00/1.00 [00:00<00:00, 12.5B/s]

Downloading sub-NDARCU736GZ1_task-contrastChangeDetection_run-2_channels.tsv: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARCU736GZ1_task-contrastChangeDetection_run-2_channels.tsv: 100%|██████████| 1.00/1.00 [00:00<00:00, 10.1B/s]

Downloading sub-NDARCU633GCZ_task-contrastChangeDetection_run-2_events.tsv: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARCU633GCZ_task-contrastChangeDetection_run-3_eeg.json: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARCU633GCZ_task-contrastChangeDetection_run-1_eeg.json: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARCU633GCZ_task-contrastChangeDetection_run-2_events.tsv: 100%|██████████| 1.00/1.00 [00:00<00:00, 13.7B/s]

Downloading sub-NDARCU633GCZ_task-contrastChangeDetection_run-3_eeg.json: 100%|██████████| 1.00/1.00 [00:00<00:00, 13.6B/s]

Downloading sub-NDARCU633GCZ_task-contrastChangeDetection_run-1_eeg.json: 100%|██████████| 1.00/1.00 [00:00<00:00, 12.7B/s]

Downloading sub-NDARCU736GZ1_task-contrastChangeDetection_run-2_events.tsv: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARCU633GCZ_task-contrastChangeDetection_run-2_eeg.json: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARCU736GZ1_task-contrastChangeDetection_run-2_events.tsv: 100%|██████████| 1.00/1.00 [00:00<00:00, 9.68B/s]

Downloading sub-NDARCU633GCZ_task-contrastChangeDetection_run-3_eeg.bdf: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARCU633GCZ_task-contrastChangeDetection_run-1_eeg.bdf: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARCU633GCZ_task-contrastChangeDetection_run-2_eeg.json: 100%|██████████| 1.00/1.00 [00:00<00:00, 9.67B/s]

Downloading sub-NDARCU736GZ1_task-contrastChangeDetection_run-2_eeg.json: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARCU633GCZ_task-contrastChangeDetection_run-3_eeg.bdf: 100%|██████████| 1.00/1.00 [00:00<00:00, 5.17B/s]

Downloading sub-NDARCU633GCZ_task-contrastChangeDetection_run-2_eeg.bdf: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARCU736GZ1_task-contrastChangeDetection_run-2_eeg.json: 100%|██████████| 1.00/1.00 [00:00<00:00, 13.0B/s]

Downloading sub-NDARCU633GCZ_task-contrastChangeDetection_run-1_eeg.bdf: 100%|██████████| 1.00/1.00 [00:00<00:00, 4.04B/s]

Downloading sub-NDARCU633GCZ_task-contrastChangeDetection_run-1_eeg.bdf: 100%|██████████| 1.00/1.00 [00:00<00:00, 4.03B/s]

Downloading sub-NDARCU736GZ1_task-contrastChangeDetection_run-3_channels.tsv: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARCU736GZ1_task-contrastChangeDetection_run-2_eeg.bdf: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARCU736GZ1_task-contrastChangeDetection_run-3_channels.tsv: 100%|██████████| 1.00/1.00 [00:00<00:00, 12.6B/s]

Downloading sub-NDARCU633GCZ_task-contrastChangeDetection_run-2_eeg.bdf: 100%|██████████| 1.00/1.00 [00:00<00:00, 4.42B/s]

Downloading sub-NDARCU633GCZ_task-contrastChangeDetection_run-2_eeg.bdf: 100%|██████████| 1.00/1.00 [00:00<00:00, 4.41B/s]

Downloading sub-NDARCU736GZ1_task-contrastChangeDetection_run-1_channels.tsv: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARCU736GZ1_task-contrastChangeDetection_run-1_channels.tsv: 100%|██████████| 1.00/1.00 [00:00<00:00, 12.4B/s]

Downloading sub-NDARCU736GZ1_task-contrastChangeDetection_run-3_events.tsv: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARCU744XWL_task-contrastChangeDetection_run-1_channels.tsv: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARCU736GZ1_task-contrastChangeDetection_run-2_eeg.bdf: 100%|██████████| 1.00/1.00 [00:00<00:00, 4.04B/s]

Downloading sub-NDARCU736GZ1_task-contrastChangeDetection_run-2_eeg.bdf: 100%|██████████| 1.00/1.00 [00:00<00:00, 4.04B/s]

Downloading sub-NDARCU736GZ1_task-contrastChangeDetection_run-3_events.tsv: 100%|██████████| 1.00/1.00 [00:00<00:00, 13.4B/s]

Downloading sub-NDARCU744XWL_task-contrastChangeDetection_run-1_channels.tsv: 100%|██████████| 1.00/1.00 [00:00<00:00, 11.4B/s]

Downloading sub-NDARCU736GZ1_task-contrastChangeDetection_run-1_events.tsv: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARCU736GZ1_task-contrastChangeDetection_run-1_events.tsv: 100%|██████████| 1.00/1.00 [00:00<00:00, 12.9B/s]

Downloading sub-NDARCU744XWL_task-contrastChangeDetection_run-3_channels.tsv: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARCU736GZ1_task-contrastChangeDetection_run-3_eeg.json: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARCU744XWL_task-contrastChangeDetection_run-1_events.tsv: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARCU744XWL_task-contrastChangeDetection_run-3_channels.tsv: 100%|██████████| 1.00/1.00 [00:00<00:00, 13.1B/s]

Downloading sub-NDARCU736GZ1_task-contrastChangeDetection_run-3_eeg.json: 100%|██████████| 1.00/1.00 [00:00<00:00, 13.7B/s]

Downloading sub-NDARCU744XWL_task-contrastChangeDetection_run-1_events.tsv: 100%|██████████| 1.00/1.00 [00:00<00:00, 11.3B/s]

Downloading sub-NDARCU736GZ1_task-contrastChangeDetection_run-1_eeg.json: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARCU736GZ1_task-contrastChangeDetection_run-1_eeg.json: 100%|██████████| 1.00/1.00 [00:00<00:00, 13.1B/s]

Downloading sub-NDARCU744XWL_task-contrastChangeDetection_run-3_events.tsv: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARCU736GZ1_task-contrastChangeDetection_run-3_eeg.bdf: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARCU744XWL_task-contrastChangeDetection_run-1_eeg.json: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARCU744XWL_task-contrastChangeDetection_run-3_events.tsv: 100%|██████████| 1.00/1.00 [00:00<00:00, 11.1B/s]

Downloading sub-NDARCU744XWL_task-contrastChangeDetection_run-1_eeg.json: 100%|██████████| 1.00/1.00 [00:00<00:00, 13.6B/s]

Downloading sub-NDARCU736GZ1_task-contrastChangeDetection_run-1_eeg.bdf: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARCU744XWL_task-contrastChangeDetection_run-3_eeg.json: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARCU736GZ1_task-contrastChangeDetection_run-3_eeg.bdf: 100%|██████████| 1.00/1.00 [00:00<00:00, 3.80B/s]

Downloading sub-NDARCU736GZ1_task-contrastChangeDetection_run-3_eeg.bdf: 100%|██████████| 1.00/1.00 [00:00<00:00, 3.79B/s]

Downloading sub-NDARCU744XWL_task-contrastChangeDetection_run-1_eeg.bdf: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARCU744XWL_task-contrastChangeDetection_run-3_eeg.json: 100%|██████████| 1.00/1.00 [00:00<00:00, 12.8B/s]

Downloading sub-NDARCU736GZ1_task-contrastChangeDetection_run-1_eeg.bdf: 100%|██████████| 1.00/1.00 [00:00<00:00, 4.26B/s]

Downloading sub-NDARCU736GZ1_task-contrastChangeDetection_run-1_eeg.bdf: 100%|██████████| 1.00/1.00 [00:00<00:00, 4.26B/s]

Downloading sub-NDARCU744XWL_task-contrastChangeDetection_run-2_channels.tsv: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARCU744XWL_task-contrastChangeDetection_run-3_eeg.bdf: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARCU744XWL_task-contrastChangeDetection_run-2_channels.tsv: 100%|██████████| 1.00/1.00 [00:00<00:00, 8.06B/s]

Downloading sub-NDARDC843HHM_task-contrastChangeDetection_run-3_channels.tsv: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARDC843HHM_task-contrastChangeDetection_run-3_channels.tsv: 100%|██████████| 1.00/1.00 [00:00<00:00, 12.2B/s]

Downloading sub-NDARCU744XWL_task-contrastChangeDetection_run-2_events.tsv: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARCU744XWL_task-contrastChangeDetection_run-1_eeg.bdf: 100%|██████████| 1.00/1.00 [00:00<00:00, 2.15B/s]

Downloading sub-NDARCU744XWL_task-contrastChangeDetection_run-1_eeg.bdf: 100%|██████████| 1.00/1.00 [00:00<00:00, 2.14B/s]

Downloading sub-NDARCU744XWL_task-contrastChangeDetection_run-3_eeg.bdf: 100%|██████████| 1.00/1.00 [00:00<00:00, 4.20B/s]

Downloading sub-NDARCU744XWL_task-contrastChangeDetection_run-3_eeg.bdf: 100%|██████████| 1.00/1.00 [00:00<00:00, 4.18B/s]

Downloading sub-NDARCU744XWL_task-contrastChangeDetection_run-2_events.tsv: 100%|██████████| 1.00/1.00 [00:00<00:00, 13.4B/s]

Downloading sub-NDARDC843HHM_task-contrastChangeDetection_run-3_events.tsv: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARDC843HHM_task-contrastChangeDetection_run-3_events.tsv: 100%|██████████| 1.00/1.00 [00:00<00:00, 13.2B/s]

Downloading sub-NDARDC843HHM_task-contrastChangeDetection_run-2_channels.tsv: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARDC843HHM_task-contrastChangeDetection_run-1_channels.tsv: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARCU744XWL_task-contrastChangeDetection_run-2_eeg.json: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARDC843HHM_task-contrastChangeDetection_run-2_channels.tsv: 100%|██████████| 1.00/1.00 [00:00<00:00, 12.3B/s]

Downloading sub-NDARDC843HHM_task-contrastChangeDetection_run-1_channels.tsv: 100%|██████████| 1.00/1.00 [00:00<00:00, 13.0B/s]

Downloading sub-NDARCU744XWL_task-contrastChangeDetection_run-2_eeg.json: 100%|██████████| 1.00/1.00 [00:00<00:00, 13.6B/s]

Downloading sub-NDARDC843HHM_task-contrastChangeDetection_run-3_eeg.json: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARDC843HHM_task-contrastChangeDetection_run-3_eeg.json: 100%|██████████| 1.00/1.00 [00:00<00:00, 12.7B/s]

Downloading sub-NDARDC843HHM_task-contrastChangeDetection_run-2_events.tsv: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARDC843HHM_task-contrastChangeDetection_run-1_events.tsv: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARCU744XWL_task-contrastChangeDetection_run-2_eeg.bdf: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARDC843HHM_task-contrastChangeDetection_run-1_events.tsv: 100%|██████████| 1.00/1.00 [00:00<00:00, 13.0B/s]

Downloading sub-NDARDC843HHM_task-contrastChangeDetection_run-2_events.tsv: 100%|██████████| 1.00/1.00 [00:00<00:00, 9.78B/s]

Downloading sub-NDARDC843HHM_task-contrastChangeDetection_run-3_eeg.bdf: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARDC843HHM_task-contrastChangeDetection_run-1_eeg.json: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARDC843HHM_task-contrastChangeDetection_run-2_eeg.json: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARCU744XWL_task-contrastChangeDetection_run-2_eeg.bdf: 100%|██████████| 1.00/1.00 [00:00<00:00, 4.41B/s]

Downloading sub-NDARCU744XWL_task-contrastChangeDetection_run-2_eeg.bdf: 100%|██████████| 1.00/1.00 [00:00<00:00, 4.40B/s]

Downloading sub-NDARDC843HHM_task-contrastChangeDetection_run-1_eeg.json: 100%|██████████| 1.00/1.00 [00:00<00:00, 13.2B/s]

Downloading sub-NDARDC843HHM_task-contrastChangeDetection_run-2_eeg.json: 100%|██████████| 1.00/1.00 [00:00<00:00, 13.2B/s]

Downloading sub-NDARDC843HHM_task-contrastChangeDetection_run-3_eeg.bdf: 100%|██████████| 1.00/1.00 [00:00<00:00, 4.13B/s]

Downloading sub-NDARDC843HHM_task-contrastChangeDetection_run-3_eeg.bdf: 100%|██████████| 1.00/1.00 [00:00<00:00, 4.13B/s]

Downloading sub-NDARDH086ZKK_task-contrastChangeDetection_run-2_channels.tsv: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARDC843HHM_task-contrastChangeDetection_run-1_eeg.bdf: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARDC843HHM_task-contrastChangeDetection_run-2_eeg.bdf: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARDH086ZKK_task-contrastChangeDetection_run-2_channels.tsv: 100%|██████████| 1.00/1.00 [00:00<00:00, 12.7B/s]

Downloading sub-NDARDH086ZKK_task-contrastChangeDetection_run-3_channels.tsv: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARDH086ZKK_task-contrastChangeDetection_run-3_channels.tsv: 100%|██████████| 1.00/1.00 [00:00<00:00, 13.1B/s]

Downloading sub-NDARDH086ZKK_task-contrastChangeDetection_run-2_events.tsv: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARDC843HHM_task-contrastChangeDetection_run-2_eeg.bdf: 100%|██████████| 1.00/1.00 [00:00<00:00, 4.40B/s]

Downloading sub-NDARDC843HHM_task-contrastChangeDetection_run-2_eeg.bdf: 100%|██████████| 1.00/1.00 [00:00<00:00, 4.39B/s]

Downloading sub-NDARDC843HHM_task-contrastChangeDetection_run-1_eeg.bdf: 100%|██████████| 1.00/1.00 [00:00<00:00, 4.23B/s]

Downloading sub-NDARDC843HHM_task-contrastChangeDetection_run-1_eeg.bdf: 100%|██████████| 1.00/1.00 [00:00<00:00, 4.23B/s]

Downloading sub-NDARDH086ZKK_task-contrastChangeDetection_run-2_events.tsv: 100%|██████████| 1.00/1.00 [00:00<00:00, 13.4B/s]

Downloading sub-NDARDH086ZKK_task-contrastChangeDetection_run-3_events.tsv: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARDH086ZKK_task-contrastChangeDetection_run-1_channels.tsv: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARDL305BT8_task-contrastChangeDetection_run-1_channels.tsv: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARDH086ZKK_task-contrastChangeDetection_run-3_events.tsv: 100%|██████████| 1.00/1.00 [00:00<00:00, 12.9B/s]

Downloading sub-NDARDH086ZKK_task-contrastChangeDetection_run-2_eeg.json: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARDH086ZKK_task-contrastChangeDetection_run-1_channels.tsv: 100%|██████████| 1.00/1.00 [00:00<00:00, 13.3B/s]

Downloading sub-NDARDL305BT8_task-contrastChangeDetection_run-1_channels.tsv: 100%|██████████| 1.00/1.00 [00:00<00:00, 13.0B/s]

Downloading sub-NDARDH086ZKK_task-contrastChangeDetection_run-2_eeg.json: 100%|██████████| 1.00/1.00 [00:00<00:00, 13.2B/s]

Downloading sub-NDARDH086ZKK_task-contrastChangeDetection_run-3_eeg.json: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARDH086ZKK_task-contrastChangeDetection_run-1_events.tsv: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARDL305BT8_task-contrastChangeDetection_run-1_events.tsv: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARDH086ZKK_task-contrastChangeDetection_run-2_eeg.bdf: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARDH086ZKK_task-contrastChangeDetection_run-3_eeg.json: 100%|██████████| 1.00/1.00 [00:00<00:00, 12.2B/s]

Downloading sub-NDARDH086ZKK_task-contrastChangeDetection_run-1_events.tsv: 100%|██████████| 1.00/1.00 [00:00<00:00, 13.2B/s]

Downloading sub-NDARDL305BT8_task-contrastChangeDetection_run-1_events.tsv: 100%|██████████| 1.00/1.00 [00:00<00:00, 13.4B/s]

Downloading sub-NDARDH086ZKK_task-contrastChangeDetection_run-3_eeg.bdf: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARDH086ZKK_task-contrastChangeDetection_run-1_eeg.json: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARDL305BT8_task-contrastChangeDetection_run-1_eeg.json: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARDH086ZKK_task-contrastChangeDetection_run-2_eeg.bdf: 100%|██████████| 1.00/1.00 [00:00<00:00, 3.49B/s]

Downloading sub-NDARDH086ZKK_task-contrastChangeDetection_run-2_eeg.bdf: 100%|██████████| 1.00/1.00 [00:00<00:00, 3.48B/s]

Downloading sub-NDARDH086ZKK_task-contrastChangeDetection_run-1_eeg.json: 100%|██████████| 1.00/1.00 [00:00<00:00, 13.6B/s]

Downloading sub-NDARDL305BT8_task-contrastChangeDetection_run-1_eeg.json: 100%|██████████| 1.00/1.00 [00:00<00:00, 12.7B/s]

Downloading sub-NDARDH086ZKK_task-contrastChangeDetection_run-3_eeg.bdf: 100%|██████████| 1.00/1.00 [00:00<00:00, 4.37B/s]

Downloading sub-NDARDH086ZKK_task-contrastChangeDetection_run-3_eeg.bdf: 100%|██████████| 1.00/1.00 [00:00<00:00, 4.37B/s]

Downloading sub-NDARDL305BT8_task-contrastChangeDetection_run-2_channels.tsv: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARDH086ZKK_task-contrastChangeDetection_run-1_eeg.bdf: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARDL305BT8_task-contrastChangeDetection_run-1_eeg.bdf: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARDL305BT8_task-contrastChangeDetection_run-2_channels.tsv: 100%|██████████| 1.00/1.00 [00:00<00:00, 13.9B/s]

Downloading sub-NDARDL305BT8_task-contrastChangeDetection_run-3_channels.tsv: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARDL305BT8_task-contrastChangeDetection_run-3_channels.tsv: 100%|██████████| 1.00/1.00 [00:00<00:00, 13.3B/s]

Downloading sub-NDARDL305BT8_task-contrastChangeDetection_run-2_events.tsv: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARDH086ZKK_task-contrastChangeDetection_run-1_eeg.bdf: 100%|██████████| 1.00/1.00 [00:00<00:00, 3.53B/s]

Downloading sub-NDARDH086ZKK_task-contrastChangeDetection_run-1_eeg.bdf: 100%|██████████| 1.00/1.00 [00:00<00:00, 3.52B/s]

Downloading sub-NDARDL305BT8_task-contrastChangeDetection_run-2_events.tsv: 100%|██████████| 1.00/1.00 [00:00<00:00, 13.5B/s]

Downloading sub-NDARDL305BT8_task-contrastChangeDetection_run-1_eeg.bdf: 100%|██████████| 1.00/1.00 [00:00<00:00, 2.87B/s]

Downloading sub-NDARDL305BT8_task-contrastChangeDetection_run-1_eeg.bdf: 100%|██████████| 1.00/1.00 [00:00<00:00, 2.87B/s]

Downloading sub-NDARDL305BT8_task-contrastChangeDetection_run-3_events.tsv: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARDU853XZ6_task-contrastChangeDetection_run-3_channels.tsv: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARDL305BT8_task-contrastChangeDetection_run-3_events.tsv: 100%|██████████| 1.00/1.00 [00:00<00:00, 12.8B/s]

Downloading sub-NDARDU853XZ6_task-contrastChangeDetection_run-3_channels.tsv: 100%|██████████| 1.00/1.00 [00:00<00:00, 13.1B/s]

Downloading sub-NDARDU853XZ6_task-contrastChangeDetection_run-2_channels.tsv: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARDL305BT8_task-contrastChangeDetection_run-2_eeg.json: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARDU853XZ6_task-contrastChangeDetection_run-2_channels.tsv: 100%|██████████| 1.00/1.00 [00:00<00:00, 10.8B/s]

Downloading sub-NDARDU853XZ6_task-contrastChangeDetection_run-3_events.tsv: 0%| | 0.00/1.00 [00:00<?, ?B/s]

Downloading sub-NDARDL305BT8_task-contrastChangeDetection_run-2_eeg.json: 100%|██████████| 1.00/1.00 [00:00<00:00, 12.7B/s]

Alternatives for downloading the data

You can also download the data with wget or the AWS CLI; these options are likely to be faster. See the HBN-EEG data webpage for more details. Make sure to download the 100 Hz preprocessed data in BDF format.

Example of wget for release R1:

wget https://sccn.ucsd.edu/download/eeg2025/R1_L100_bdf.zip -O R1_L100_bdf.zip

Example of AWS CLI for release R1:

aws s3 sync s3://nmdatasets/NeurIPS25/R1_L100_bdf data/R1_L100_bdf --no-sign-request
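The wget route yields a single zip archive that still has to be unpacked, whereas `aws s3 sync` writes the files directly into a folder. A minimal sketch for unpacking the archive with Python's standard library, assuming the archive name from the wget example and a hypothetical `data/` target folder. The internal layout of the archive is not described here, so the hypothetical helper `extract_release` simply searches recursively for `.bdf` files after extraction:

```python
import zipfile
from pathlib import Path


def extract_release(zip_path, out_dir):
    """Extract a downloaded release archive and return the BDF files it contains.

    Hypothetical helper for illustration; it is not part of EEGDash.
    """
    out_dir = Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(zip_path) as zf:
        zf.extractall(out_dir)
    # Sorted so the file order is deterministic across runs
    return sorted(out_dir.rglob("*.bdf"))
```

For example, `extract_release("R1_L100_bdf.zip", "data")` unpacks the R1 archive into `data/` and returns the paths of all BDF recordings found there.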


Create windows of interest

We epoch relative to the stimulus onset: each 2 s window starts 500 ms after the stimulus.
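The sample offsets passed to the windowing call in the code below can be checked by hand. A small arithmetic sketch, assuming the stimulus anchor annotation has zero duration (so the trial span ends at onset plus the stop offset) and that only windows lying fully inside that span are kept:

```python
SFREQ = 100             # Hz, as in the tutorial code
SHIFT_AFTER_STIM = 0.5  # s after stimulus onset where the span starts
WINDOW_LEN = 2.0        # s, length of the span after the shift
EPOCH_LEN_S = 2.0       # s, length of each window

start = int(SHIFT_AFTER_STIM * SFREQ)                # first sample after onset
stop = int((SHIFT_AFTER_STIM + WINDOW_LEN) * SFREQ)  # end of the span
size = int(EPOCH_LEN_S * SFREQ)                      # samples per window
stride = SFREQ                                       # step between windows

# Number of sliding windows that fit completely inside [start, stop)
n_windows = (stop - start - size) // stride + 1
print(start, stop, size, n_windows)  # 50 250 200 1
```

Under these assumptions each stimulus yields exactly one 2 s window covering 0.5 s to 2.5 s after onset; the 1 s stride would only matter if the span were longer than the window.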

from braindecode.preprocessing import (
    Preprocessor,
    create_windows_from_events,
    preprocess,
)
from eegdash.hbn.windows import (
    add_aux_anchors,
    add_extras_columns,
    annotate_trials_with_target,
    keep_only_recordings_with,
)

EPOCH_LEN_S = 2.0
SFREQ = 100  # Hz; the competition data is the 100 Hz preprocessed release

# Annotate each trial with its response-time target, then add stimulus anchors
transformation_offline = [
    Preprocessor(
        annotate_trials_with_target,
        target_field="rt_from_stimulus",
        epoch_length=EPOCH_LEN_S,
        require_stimulus=True,
        require_response=True,
        apply_on_array=False,
    ),
    Preprocessor(add_aux_anchors, apply_on_array=False),
]
preprocess(dataset_ccd, transformation_offline, n_jobs=1)

ANCHOR = "stimulus_anchor"
SHIFT_AFTER_STIM = 0.5
WINDOW_LEN = 2.0

# Keep only recordings that actually contain stimulus anchors
dataset = keep_only_recordings_with(ANCHOR, dataset_ccd)

# Create single-interval windows (stim-locked, long enough to include the response)
single_windows = create_windows_from_events(
    dataset,
    mapping={ANCHOR: 0},
    trial_start_offset_samples=int(SHIFT_AFTER_STIM * SFREQ),  # +0.5 s
    trial_stop_offset_samples=int((SHIFT_AFTER_STIM + WINDOW_LEN) * SFREQ),  # +2.5 s
    window_size_samples=int(EPOCH_LEN_S * SFREQ),
    window_stride_samples=SFREQ,
    preload=True,
)

# Copy the per-trial metadata stored in the MNE annotation extras into the window metadata
single_windows = add_extras_columns(
    single_windows,
    dataset,
    desc=ANCHOR,
    keys=(
        "target",
        "rt_from_stimulus",
        "rt_from_trialstart",
        "stimulus_onset",
        "response_onset",
        "correct",
        "response_type",
    ),
)


/home/runner/work/EEGDash/EEGDash/.venv/lib/python3.11/site-packages/braindecode/preprocessing/windowers.py:630: UserWarning: Dropping extra columns that conflict with windowing metadata: {'target'}

warnings.warn(
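The `annotate_trials_with_target` step attaches to each trial the regression target `rt_from_stimulus`, the response time measured from stimulus onset. A conceptual sketch of that pairing in plain Python, not the EEGDash implementation (which works on the MNE annotations of each recording). It assumes simplified hypothetical event labels `"stimulus"` and `"response"`, a hypothetical `max_delay` cut-off, and that `require_stimulus=True` / `require_response=True` amount to dropping incomplete trials:

```python
from dataclasses import dataclass


@dataclass
class Event:
    onset: float  # seconds from recording start
    kind: str     # hypothetical labels: "stimulus" or "response"


def reaction_times(events, max_delay=2.0):
    """Pair each stimulus with the first response that follows it.

    Trials whose response is missing, arrives after max_delay seconds,
    or comes after the next stimulus are dropped.
    """
    events = sorted(events, key=lambda e: e.onset)
    rts = []
    for i, ev in enumerate(events):
        if ev.kind != "stimulus":
            continue
        for nxt in events[i + 1:]:
            if nxt.onset - ev.onset > max_delay:
                break  # too late: no valid response for this trial
            if nxt.kind == "stimulus":
                break  # next trial started before any response
            if nxt.kind == "response":
                rts.append(round(nxt.onset - ev.onset, 6))
                break
    return rts
```

With a response 0.45 s after the first stimulus and no timely responses to the others, only that first trial survives and its target is 0.45 s.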
